🔄 Merging Data from Multiple Sources into One Clean Pipeline Using Google Cloud Dataflow

Complete guide to building unified data pipelines with Google Cloud Dataflow and BigQuery

Table of Contents

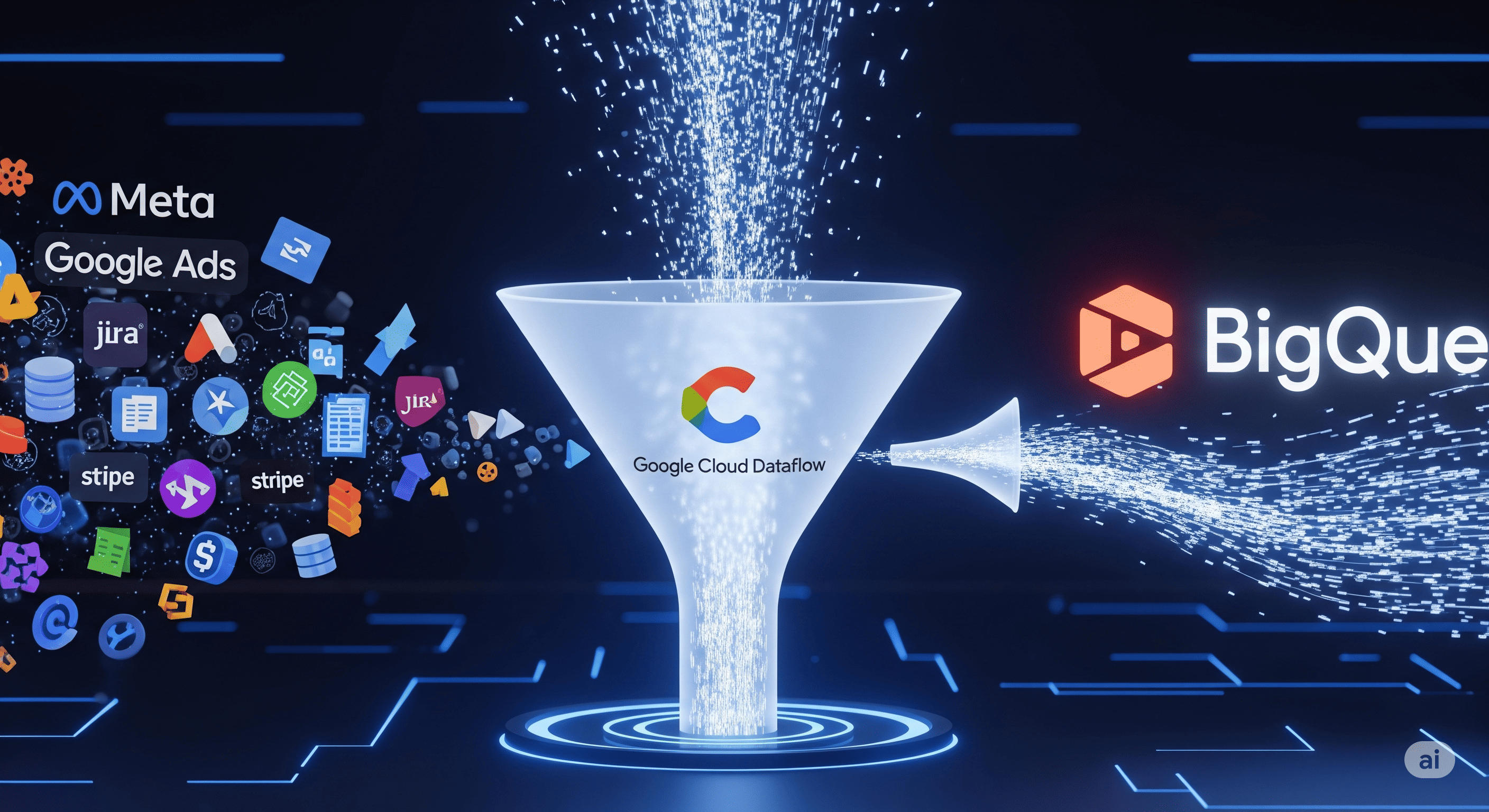

🔄 Merging Data from Multiple Sources into One Clean Pipeline Using Google Cloud Dataflow

In today's fragmented digital ecosystem, data lives everywhere:

- Meta Ads Manager

- Google Analytics 4

- Jira & Trello

- Figma, Adobe Analytics

- Stripe, HubSpot, LinkedIn Ads

The challenge?

> "We can't make decisions because our data is scattered across 10 different platforms."

At Dezoko, we specialize in building unified data pipelines -- and for one of our clients, we used Google Cloud Dataflow to merge 10+ external data sources into a single, clean dataset in BigQuery.

💡 Use Case: Unified Business Intelligence

Our client -- a multi-brand marketing agency -- wanted:

- ✅Centralized reporting across all client accounts

- ✅Clean, deduplicated, and normalized data

- ✅Near real-time updates

- ✅Unified dashboards (across ad platforms, analytics, and CRMs)

📐 Architecture Overview

+------------------+ +------------------+

| Meta API | | Google Ads API |

+------------------+ +------------------+

↓ ↓

+------------------+ +------------------+

| LinkedIn API | | Jira Webhooks |

+------------------+ +------------------+

↓ ↓

[Cloud Functions / Cloud Run]

↓

[Pub/Sub Topic]

↓

[Cloud Dataflow Job] ← handles parsing, transformation, joins

↓

[BigQuery Table] ← Final unified schema

↓

[Looker Studio Dashboards]⚙️ Tools We Used

Tool | Purpose |

|---|---|

Cloud Dataflow | Streaming ETL pipeline for transformation & joins |

Pub/Sub | Scalable event-driven ingestion layer |

Cloud Functions | Lightweight API fetchers from sources |

BigQuery | Central storage, analytics, and dashboard source |

Cloud Scheduler | Triggers daily fetches or webhook verifications |

Secret Manager | API tokens, auth credentials |

🔄 Step-by-Step: Our Data Merge Strategy

1️⃣ Data Collection via API/Webhooks

We set up:

- Pull-based fetchers (e.g., Meta, LinkedIn, Google Ads)

- Webhooks for push-based sources (e.g., Jira, Trello)

- Cron jobs for periodic data where APIs lacked webhooks

- All payloads pushed to Pub/Sub

2️⃣ Streaming with Cloud Dataflow

We built a streaming pipeline with:

- Source: Pub/Sub subscription

- Transforms:

- Schema mapping to internal standard

- Deduplication using unique keys

- Data enrichment (adding campaign names, client IDs)

- Error handling (dead-letter queues + logs)

- Sink: Merged data into BigQuery unified table

Sample Join Example:

- Match Meta Ad data with Google Analytics session

- Combine Jira ticket tags with Adobe user behavior

- Join Stripe transactions with campaign IDs

3️⃣ Normalization & Schema Enforcement

Raw Field (Meta) | Normalized Field |

|---|---|

`campaign_name` | `campaign` |

`impressions` | `ad_impressions` |

`date_start` | `event_date` |

`client_id` | `customer_id` |

We created a single standard schema to support cross-source queries.

4️⃣ Real-Time Monitoring + Observability

- Cloud Logging: for fetch failures and API rate issues

- BigQuery Audit Logs: track each load

- Pub/Sub dead-letter: route errors to Firestore for retry

- Slack alerts for pipeline failure or delays

📈 Outcome for the Client

Metric | Before | After |

|---|---|---|

Data Sources Connected | 2 (manually) | 10+ (automated) |

Update Frequency | Weekly manual export | Realtime / hourly |

Reporting Time | ~6 hrs/week | 0 hrs |

Errors / Gaps | High | Monitored & alertable |

Decision Confidence | Low | High -- unified view |

🔐 Enterprise-Ready Features We Implemented

- ✅Token rotation with Secret Manager

- ✅Per-client data partitioning in BigQuery

- ✅IAM roles for audit-level access

- ✅Multi-region support for latency reduction

- ✅End-to-end encryption + logging

💬 What the Client Said

> "We now see everything -- ads, product behavior, and campaigns -- in one place. Your team made our data useful again."

> -- VP of Marketing Analytics

> "Before, we had tools. Now, we have insights."

> -- CEO, Martech SaaS

📞 Want to Merge Your Disconnected Data into Google Cloud?

We help you:

- ✅Ingest from any API, webhook, or file

- ✅Clean + map data with Dataflow

- ✅Store in BigQuery, Firestore, or Cloud Storage

- ✅Monitor + visualize with Looker or custom dashboards

- ✅Automate 100% of the pipeline